The Daily Grind is brought to you by:

Damn Gravity: Books for Builders and Entrepreneurs

Good morning!

Welcome to The Daily Grind for Tuesday, August 12.

Today, we’re talking about AI booms and busts, and where we stand right now.

Let’s get right into it:

📰 One Headline: AI Boom or Bust?

With last week’s release of GPT-5, OpenAI has ushered in AI’s “trough of disillusionment” era, at least according to a featured article in The Information.

GPT-5 was met with reactions ranging from “meh” to true anger. Some thought it lacked confidence and taste; others called it “horrible.”

Rare unforced errors, from abruptly turning off older models to a “chart crime” in the launch presentation, led CEO Sam Altman to apologize publicly.

The “trough of disillusionment” is a Monty Python-esque term from Gartner’s famous technology hype cycle. After a useful technology is introduced, it goes through a period of inflated expectations, reaching wild heights, before something (a new model release, in this case) brings everyone crashing back down to earth.

It does feel like we are past the peak of the AI hype. Investment in AI, from VCs as well as from enterprises racing to keep up, has effectively kept the US economy afloat over the past year, but results have been slower to materialize.

History doesn’t repeat itself, but it rhymes. Inflated expectations around AI are a common refrain. Let’s look back at a few predictions from nearly 40 years ago and see what we can learn.

AI’s Great Expectations…from 1988

On Sunday, Rodney Brooks, the famed MIT robotics professor and co-founder of iRobot, republished on his blog an op-ed he wrote back in 1988 titled “AI: great expectations.”

The timing of Brooks re-sharing this piece feels intentional. In the op-ed, he discusses the AI hype cycle of 1988: neural networks.

These networks incorporate an appealing idea in that instead of having to work out all the details of a task we’ll simply let some randomly organized network of neuron models “learn” through trial and error how to do the right thing. Although neural networks have rarely accomplish (sic) anything beyond a computer simulation, business plans are being cranked out for new start-up companies to apply the technology.

Brooks, at 33 years old, could see that the neural network excitement was just the latest AI hype cycle:

But the current neural networks phenomenon is more than just another set of high expectations. This is the second time around for neural networks. It happened in the early ’60s. In 1962 a distinguished Stanford professor predicted that computer programming would be obsolete by 1963 because, by then, users would simply converse in English with the front-end neural networks. Since then, there have been a few technical improvements, and computers are much faster, broadening the scope of the applicability and likely successes of neural networks. But, again, they really can’t be expected to solve the world’s problems. The old-timers, “immunized” the first time around, seem less enamored than the new converts.

Of course, that Stanford professor’s prediction proved to be correct—just 60 years too early.

Brooks’ skepticism of the 1988 neural network hype seemed well-placed. It would take decades, and the advent of the GPU, before neural networks became truly commercially useful.

Is the Bust Real?

The question for today’s generative AI hype cycle is whether or not the technology is actually hitting a bottleneck.

The source of our disillusionment with GPT-5 is how little progress it made compared to the colossal leaps of GPT-4 and GPT-3 before it. Was GPT-5 just a poorly planned product launch, or a desperate attempt to keep the hype cycle going in the face of real technology challenges?

I believe the technology bottleneck is real, though this time, energy is the limiting factor, not hardware. The cost of running AI is astronomically high compared to typical internet usage, particularly for coding tasks. AI coding tools are adding rate limits to curb power users, and some AI startups have already shut down due to the costs of running inference.

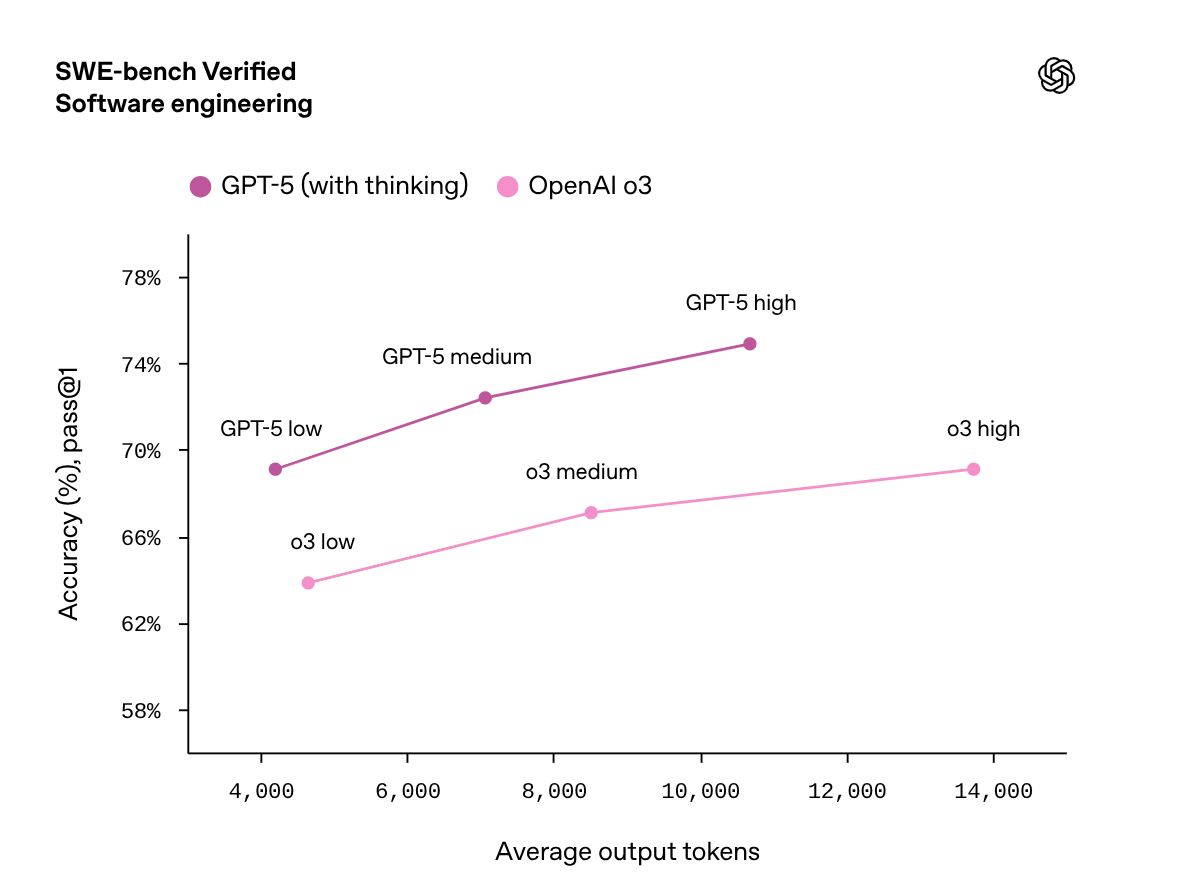

GPT-5’s release is as much about efficiency as performance. Its smart-switching feature, which chooses the most appropriate model to run based on the query, is an efficiency move. OpenAI also touts GPT-5’s token efficiency on engineering tasks compared to previous models:

Models will continue to get more efficient, but efficiency gains are inherently incremental, whereas energy capacity could grow exponentially if we invest in the right areas (spoiler: we’re not).

It’s very possible we are now in the AI trough of disillusionment, and the climb out could take years, maybe decades.

So what comes next?

Opportunity After the Bust

The space between deflated expectations and actual technological progress is where the opportunity will lie for the coming years.

When a hype cycle busts, it feels like the end, but it’s really just the beginning.

Following his 1988 op-ed, Rodney Brooks went on to co-found iRobot in 1990. In 2002, the company invented the Roomba. Today, the robotics industry—powered by AI—is bigger than it’s ever been. Between autonomous vehicles and humanoid warehouse robots, the rest of the world has finally caught up to Brooks’ decades-long obsession. Brooks himself is building Robust.AI, an industrial robotics startup.

Patience is a virtue for Brooks, and we should all remember it when building new technology.

We should also remember Amara’s Law: “We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.”

In an April 2025 blog post, Brooks shared his own version of the Law: “Deployment at scale takes so much longer than anyone ever imagines.”

The space between deflated expectations and actual technological progress is where the opportunity will lie for the coming years. The pretenders will disappear, but the real builders will keep building, for however long it takes.

Now that we’re through the peak of inflated expectations, the real progress can begin.

🔗 A Few Good Links

Here are more stories worth exploring today:

The Verge: Notion CEO Ivan Zhao wants you to demand better from your tools [Podcast]

The Verge: Claude can now remember past conversations

Popular Mechanics: Scientists Announce a Physical Warp Drive Is Now Possible. Seriously.

📚 One Page: The Nvidia Way by Tae Kim

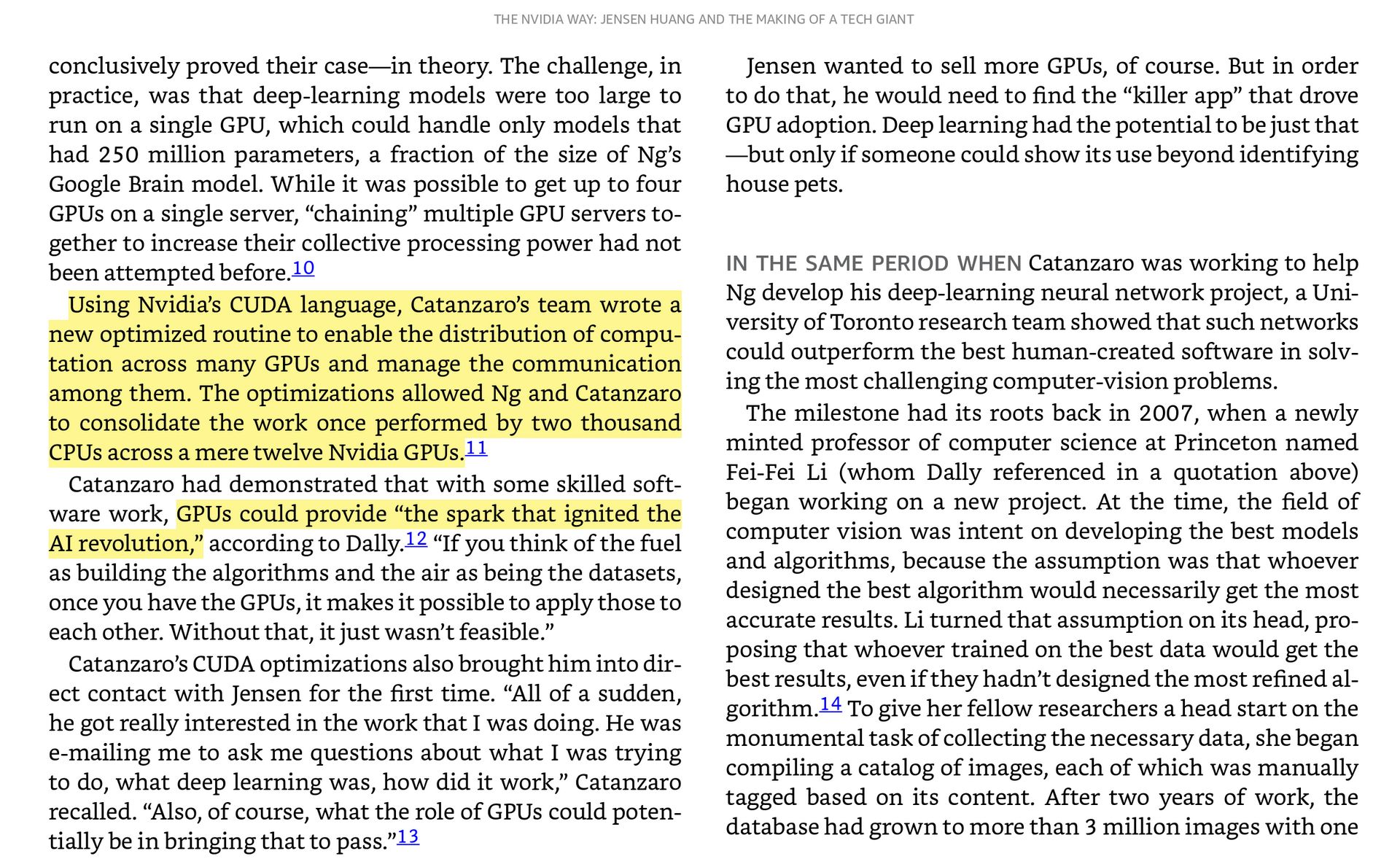

It took decades before the theory of neural networks could become reality; hardware needed to catch up. It was the GPU (graphics processing unit) and its parallel processing ability that eventually supercharged neural networks and our current generative AI boom.

Nvidia, today the world’s most valuable company, invented the GPU and was early to see how GPUs could be used to power AI. Below is a page from Tae Kim’s contemporary history of the company, The Nvidia Way:

I found The Nvidia Way to be one of the most insightful books on the current technology landscape. Highly recommend.

❓ One Question: What are you overestimating in the short term, but underestimating in the long term?

There’s a corollary to Amara’s Law that applies to individuals:

People overestimate what can be done in a year, but underestimate what can be done in ten years.

The magic of compounding—compounding technology, experience, success, and wealth—looks marginal for years before it finally takes off. Most people quit before something good has a chance to go exponential.

So your question today: What are you underestimating in the long term?

And what might happen if you stick with it?

🗳️ Wrap Up and Feedback:

That’s it for today’s Daily Grind!

What are your thoughts on the AI hype cycle? Are we in the trough of disillusionment?

What did you think of today's newsletter?

’Til tomorrow.

Cheers,

Ben